From the pre-checked boxes that subscribe you to a newsletter, to the disguised fine print that gives a company permission to sell your personal information, Dark Patterns are everywhere.

When talking about a website, the phrase “bad design” may conjure up images of terrible color schemes or mismatched fonts, but from a privacy and security perspective, “bad design” takes on a whole new meaning.

Dark Patterns are purposefully designed tricks used within the interfaces of websites and apps crafted to nudge you into signing up for or purchasing things you didn’t mean to. During their ongoing investigation into Dark Patterns, researchers at Purdue University identified five strategies that most Dark Patterns fall under: Nagging, Obstruction, Sneaking, Interface Interference and Forced Action.

Below are some of the most common examples of Dark Patterns for each of the five strategies and their sub-types.

Nagging: A redirection of expected functionality that persists over more than one interaction, or the interruption of a desired task by other tasks not directly related to the one the user is focusing on. One example of this is the prompt for enabling notifications in the Instagram app, where the only two options are “Not Now” and “OK”, giving the user no ability to permanently dismiss the prompt. Other examples of this Dark Pattern include pop-ups that hide the interface and auto-playing audio or video.


Obstruction: Making an interaction more difficult than it needs to be in order to prevent an action.

Brignull’s “Price Comparison Prevention” - This Dark Pattern purposefully makes comparing the prices of products and services difficult. Tactics include hiding model numbers/product IDs and preventing important product information on the website from being copied to stop users from pasting the information into a search bar or different website.

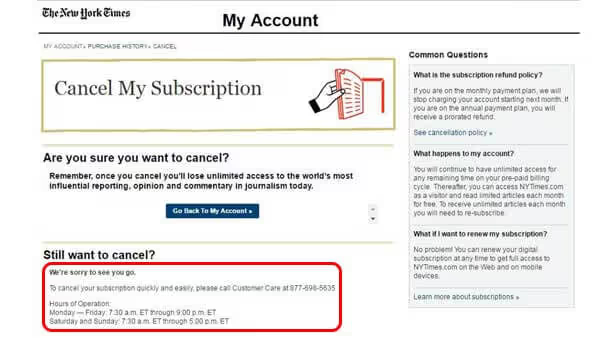

Brignull’s “Roach Motel” - A situation that is easy to get into, but hard to get out of. This usually occurs when a user is easily able to sign up for a service, but closing an account is difficult (or in some cases impossible). This Dark Pattern typically requires a user to call to cancel the account, at which point they are further pressured to maintain the account (if you’ve ever tried to cancel SIRIUS XM, you’ve experienced this firsthand). Another example is The New York Times, where in order to cancel an online subscription, users are required to call during specified business hours.

Intermediate Currency – Users are required to spend real money to purchase a virtual currency in order to use a service or purchase goods. Most often seen in video games and in in-app purchases for mobile games, the aim is to disconnect users from the real dollar value spent, which can result in users spending the virtual currency differently than they would spend real money.

Sneaking: An attempt to hide, delay or disguise information that is relevant to the user, in order to make a user perform an action they may object to if they had knowledge of it.

One example of sneaking comes from Salesforce.com, which requires the user to consent to a privacy statement before they can unsubscribe from an email newsletter. This privacy statement allows for Salesforce to sell the user’s information to other countries.

Brignull’s “Forced Continuity” – If you have ever signed up for a free trial that required a credit card, forgotten the expiration date and then been automatically charged for a paid subscription, you’ve experienced forced continuity. This Dark Pattern takes advantage of users forgetting to check and keep up with expiration dates.

Brignull’s “Hidden Costs” – A late or obscured disclosure of certain costs. In this Pattern, the advertised price changes late in the transaction due to taxes, fees, limited-time conditions or outrageous shipping costs. An example of this can be found on the website for the Boston Globe, where a site-wide banner claims a user can subscribe for 99 cents a week, but if you follow the process to subscribe, it is revealed that the advertised pricing only lasts for four weeks.

Brignull’s “Sneak into Basket” – This Dark Pattern sneaks an additional item or items into a user’s online shopping cart, often claiming to be a suggestion based on other items purchased by the user. An example of this can be found when purchasing a domain from godaddy.com. The selection page shows pricing for one year of domain registration, but after selecting the domain and proceeding to the shopping cart page, the price is inflated by additional items that have been snuck into the basket and that the user must specifically opt out of: 2 years of domain registration instead of the one year shown on the selection page.

Brignull’s “Bait and Switch” – This pattern makes it appear that a certain action will cause a certain result, only to have it cause a different, likely undesired result. One example of this is the “X” button of a pop-up performing any action other than closing the pop-up window. Another example is found in the mobile game “Two Dots”, where the button to buy more moves is placed in the position where the button to start a new game is normally located. This manipulation of muscle memory increases the likelihood of the purchase button being accidentally triggered by the user.

Interface Interference: Any manipulation of the user interface that favors specific actions over others, confuses the user or limits the discovery of possibilities of an important action.

Hidden Information – Options or actions relevant to the user that are not made readily accessible. This Dark Pattern attempts to disguise relevant information as irrelevant and can manifest as content or options hidden in fine print, a terms and conditions statement or discolored text. An example of the latter is when the text or link to unsubscribe from a newsletter or mailing list is colored the same as the background in an attempt to make it more difficult to see.
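For readers comfortable with a little code, the "discolored text" trick can be measured rather than eyeballed. The sketch below is an illustration, not a tool mentioned by the researchers: it computes the contrast ratio between a text color and its background using the WCAG 2.x formula, and flags anything below 4.5:1 (the WCAG AA minimum for normal-size text). The function names and the sample colors are hypothetical, and it assumes colors are given as six-digit hex values.

```python
def _linearize(channel: int) -> float:
    """Convert one 8-bit sRGB channel (0-255) to linear light."""
    c = channel / 255
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color written like '#rrggbb'."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """WCAG contrast ratio, from 1.0 (identical colors) up to about 21 (black on white)."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


def looks_hidden(foreground: str, background: str, threshold: float = 4.5) -> bool:
    """True when the text is likely hard to read against its background."""
    return contrast_ratio(foreground, background) < threshold


# Hypothetical example: a light gray "unsubscribe" link on a white page.
unsubscribe_link_hidden = looks_hidden("#cccccc", "#ffffff")
ordinary_text_hidden = looks_hidden("#000000", "#ffffff")
```

A low ratio does not prove bad intent, since plenty of sites simply have poor contrast, but an unsubscribe link that fails this check while the "subscribe" button passes easily is worth a second look.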

Preselection – Any situation where an option is selected by default before any user interaction. For example, when Twitter recently updated their email notifications, they automatically opted all users into receiving emails for several items, including “Top Tweets and Stories” activity.
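On a web form, preselection often shows up as a checkbox or radio button that is already ticked when the page loads. As a rough sketch of how one might spot these, the snippet below scans a page's HTML for such inputs using Python's standard `html.parser` module. The class name, function name and sample form are hypothetical, and the approach only sees options pre-checked in the static HTML; anything ticked later by a script would be missed.

```python
from html.parser import HTMLParser


class PrecheckedFinder(HTMLParser):
    """Collects checkboxes and radio buttons that are checked by default."""

    def __init__(self):
        super().__init__()
        self.prechecked = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        input_type = (attributes.get("type") or "").lower()
        if tag == "input" and input_type in ("checkbox", "radio") and "checked" in attributes:
            # Report the field by its name, falling back to its id.
            label = attributes.get("name") or attributes.get("id") or "(unnamed)"
            self.prechecked.append(label)


def find_prechecked(html: str) -> list:
    """Return the names of all inputs that are pre-selected in the given HTML."""
    finder = PrecheckedFinder()
    finder.feed(html)
    finder.close()
    return finder.prechecked


# Hypothetical sign-up form with two options ticked in advance.
sample_form = """
<form>
  <input type="checkbox" name="newsletter" checked>
  <input type="checkbox" name="terms">
  <input type="checkbox" name="share_data" checked="checked" />
</form>
"""
preselected = find_prechecked(sample_form)
```

Not every pre-checked box is a Dark Pattern, but a list like this makes it easy to review which choices a site has made on the user's behalf.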

Aesthetic Manipulation – Any design crafted to focus a user’s attention on one thing in order to distract or convince a user. This Dark Pattern has four specific subtypes:

Toying with Emotion – Use of color, language or style to persuade a user into an action. Two examples of this can be found when trying to deactivate a Facebook account. When starting the process, users are shown friends that “would miss them”, and Facebook then tries to convince the user to stay by offering a counterargument to whatever reason they selected for deactivating their account.

False Hierarchy – Giving one or more options visual or interactive precedence over others in order to convince a user to make a selection. For example, when trying to unsubscribe from Yahoo’s newsletter, the design encourages users to click a large, blue “no, cancel” button. In order to actually cancel the subscription, the user must select one of the small, light gray text options under the large blue button.

Brignull’s “Disguised Ad” – Ads disguised as interactive games, download buttons or other prominent interaction or information a user is looking for.

Brignull’s “Trick Questions” – Use of confusing wording, double negatives or leading language to manipulate user interactions. This Dark Pattern is commonly seen when registering with a service, where check boxes are shown, but their meaning is alternated, so the first choice means opt out and the second choice means opt in.

Forced Action: Any situation in which users are required to perform a specific action to access or continue to access functionality.

An example of Forced Action is something most of us are familiar with - shutting down a PC running the Windows 10 operating system. When there is a system update available, the choices are “Update and Shut Down” and “Update and Restart”, leaving no choice but to proceed with the update.

The earlier example of sneaking illustrated on Salesforce.com is also part of this Dark Pattern since the user must agree to allow the site to sell their information to other countries in order to access functionality (in this case, unsubscribing).

Social Pyramid/Friend Spam – Commonly used in social media applications and online games, this Dark Pattern incentivizes or requires users to recruit others in order to use a service. The Farmville app provides a great example of both social pyramid/friend spam and the final subtype, Gamification, by pressuring users to invite friends because certain goals and features within the game are useless or inaccessible without online friends also playing.

Gamification – Certain aspects of a service can only be “earned” through repeated use of aspects of the service. For example, the mobile game Candy Crush Saga occasionally gives players levels that are impossible to complete in order to urge them into purchasing extra lives or power-ups. If the player does not purchase anything, the game slowly lowers the difficulty again in order to keep users playing.

The Federal Trade Commission Act regulates the use of deceptive online marketing, advertising and sales - but unfortunately has no legal authority to regulate companies that use Dark Patterns to trick and deceive users. Although there is no clear solution to the Dark Pattern issue, raising awareness is key and the ability to spot Dark Patterns can go a long way in helping you avoid them (and the companies that use them).

If you are interested in joining the fight against these deceptive design tricks, be sure to visit the Dark Patterns website created by Harry Brignull - which he founded to "shame and name" websites and companies that use Dark Patterns.
